Whenever things go haywire in an OS or application, there are two areas that get looked at first:

- Networks

- Storage

In a fair few cases the issue turns out to be external to the host systems, but when it comes to performance-related problems from a storage perspective, the entire IO stack is at play.

In support we often deal with issues at the array, switch and host level that do not surface until we take a deep look into logs, dumps and traces to find out what the problem is and what is causing it. In a fair few circumstances, however, there is nothing to see in the fabric or storage subsystem, and we're in the hands of the server administrators to provide us with the proper information.

Old code

In many cases we see that firmware and drivers at the HBA level are so far behind current releases that they are susceptible to bucketloads of issues. Release notes with fix lists spanning around 800 lines are nothing new.

As an example, below are a few quick snippets from a QLogic driver release note (Emulex is no exception, though):

QLogic driver versions below 9.2.4.20:

* [ER140892]: Path Loss issue with Brocade switch FOS upgrade

Resolution: Added login retries to Name Server, postponing processing RSCN if Name Server is logged out

Scope : All Adapters

Or this one:

* [ER132526]: Path loss caused by missing PLOGI after FLOGI

Resolution: Clear RSCN information before ISP reset

Scope : 2500, 2600, 2700 Series Adapters

And this one:

* Added logic to trigger re-login to NS when receiving new RSCN if NS is still logged out after previous retries expire.

I'm not saying the firmware and drivers are bad. The fact that both the QLogic (Marvell) and Emulex (Broadcom) guys are on top of things and fix issues as they come up is a good thing; those fixes just don't help you if the code you run predates them.
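To make the "how far behind are we" question concrete, here is a minimal Python sketch that compares an installed driver version against the first release containing a given fix. It assumes the version string has already been read from the host with whatever tooling the OS provides, that versions are plain dotted integers, and that both ER items quoted above were fixed in 9.2.4.20 (inferred from the "below 9.2.4.20" wording). The `Fix` type, the `KNOWN_FIXES` table and the installed version `9.1.17.21` are hypothetical.

```python
"""Sketch: flag release-note fixes missing from an installed HBA driver.

Assumptions (hypothetical, for illustration only):
- the installed version string is obtained elsewhere via OS tooling;
- versions are plain dotted integers such as "9.2.4.20";
- KNOWN_FIXES is hand-copied from release notes, and fixed_in = 9.2.4.20
  is inferred from the "below 9.2.4.20" wording in the text.
"""

from typing import NamedTuple


class Fix(NamedTuple):
    ident: str     # release-note identifier, e.g. "ER140892"
    summary: str   # one-line description from the release note
    fixed_in: str  # first driver version that contains the fix


KNOWN_FIXES = [
    Fix("ER140892", "Path loss with Brocade switch FOS upgrade", "9.2.4.20"),
    Fix("ER132526", "Path loss caused by missing PLOGI after FLOGI", "9.2.4.20"),
]


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '9.2.4.20' into (9, 2, 4, 20) so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


def missing_fixes(installed: str, fixes: list[Fix]) -> list[Fix]:
    """Return every fix whose fixed_in version is newer than the installed one."""
    current = parse_version(installed)
    return [fix for fix in fixes if current < parse_version(fix.fixed_in)]


if __name__ == "__main__":
    # Hypothetical installed version on the host being checked.
    for fix in missing_fixes("9.1.17.21", KNOWN_FIXES):
        print(f"{fix.ident}: {fix.summary} (fixed in {fix.fixed_in})")
```

Tuples compare element by element, so 9.1.17.21 sorts below 9.2.4.20 and both fixes are reported. A shorter string such as 9.2.4 also sorts below 9.2.4.20; vendor version schemes with letters or build suffixes would need a more careful parser.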

Interoperability lists

The argument we hear in support when things have gone wrong, or when we advise upgrading any part of the infrastructure to a newer version, is that "these versions are not listed on an interoperability list and therefore we do not implement them." Obviously that decision is yours, but interoperability testing does not include regression and corner-case testing. Code updates pertaining to a particular platform or hardware model don't suddenly make your environment stop working. When things have already stopped working you know you have a problem, so installing code with a bunch of fixes can only make it better. Only when entirely new functionality is added, or when drivers start supporting whole new platforms, does interoperability testing deliver some sort of assurance. If you decide to run only "tested" code levels, the only guarantee you have is that a plug-and-play scenario will work. It is impossible for vendors to go through every scenario to see if, when and how their equipment and software hold up under all of them. Bugs are a fact of life, and it's the art of systems administration to keep these systems in optima forma.

Tuning

Furthermore, the driver configuration on many systems is an out-of-the-box setting, which is only good if you do no IO at all. System and application administrators spend countless hours tuning their side of the fence, only to be blocked by an untuned IO stack. A major part of the IO stack sits in the server, from the file system down to the HBA firmware. We're talking volume managers, crypto layer, MPIO, SCSI/NVMe, FCP/FC-NVMe, HBA driver and HBA firmware. All of these can have a major influence on the IO response time as perceived by the application. If it takes 5 ms for an IO request to hit the wire on its way to the array, chances are that performance will be very poor no matter how fast the SAN and array are.
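The arithmetic behind that last point can be sketched as a simple latency budget. This Python example adds up the time an IO spends in each host-side layer listed above plus fabric and array time. Only the 5 ms host-side total comes from the text; the split across layers and the SAN and array figures are hypothetical placeholders to be replaced with measured values.

```python
"""Sketch: where an IO's response time goes, from the application's view.

All per-layer numbers are hypothetical placeholders; only the 5 ms
host-side total comes from the text. Replace them with measured values.
"""

# Host-side layers, top to bottom, with hypothetical delays in milliseconds.
HOST_LAYERS_MS = {
    "file system": 0.5,
    "volume manager": 0.5,
    "crypto layer": 1.0,
    "MPIO": 0.5,
    "SCSI/NVMe": 0.5,
    "FCP/FC-NVMe": 0.5,
    "HBA driver": 1.0,
    "HBA firmware": 0.5,
}

# Time outside the host: fabric transit and array service (hypothetical).
SAN_MS = 0.05
ARRAY_MS = 0.45


def response_time_ms(host_layers: dict[str, float], san: float, array: float) -> float:
    """Application-perceived latency is the sum of every stage the IO passes."""
    return sum(host_layers.values()) + san + array


def host_share(host_layers: dict[str, float], san: float, array: float) -> float:
    """Fraction of the total response time spent before the IO hits the wire."""
    return sum(host_layers.values()) / response_time_ms(host_layers, san, array)


if __name__ == "__main__":
    total = response_time_ms(HOST_LAYERS_MS, SAN_MS, ARRAY_MS)
    share = host_share(HOST_LAYERS_MS, SAN_MS, ARRAY_MS)
    print(f"response time: {total:.2f} ms, host share: {share:.0%}")
    # Even an infinitely fast SAN and array cannot get below the host total.
    print(f"floor: {response_time_ms(HOST_LAYERS_MS, 0.0, 0.0):.2f} ms")
```

With these placeholder figures the response time is 5.5 ms and about 91% of it is spent before the IO leaves the host. The second line shows the floor: even with a zero-latency SAN and array, the application still sees 5 ms.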

On most occasions the IO stack on hosts is a very poorly designed and configured piece of the puzzle. Tuning and optimizing it to the capabilities of the hardware can be a real game changer.
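One example of tuning to the hardware's capabilities is queue depth, a setting many HBA drivers expose. Little's law (outstanding IOs = throughput × response time) gives the ceiling on IOPS for a given number of outstanding IOs. The Python sketch below applies it; the queue depths and latencies are hypothetical examples, not vendor defaults or recommendations.

```python
"""Sketch: Little's law applied to a per-LUN queue.

Little's law: outstanding IOs = throughput x response time, so the
throughput ceiling is queue_depth / response_time. The depths and
latencies used below are hypothetical examples, not driver defaults.
"""


def max_iops(queue_depth: int, response_time_ms: float) -> float:
    """Upper bound on IOs per second for a queue that is kept completely full."""
    if queue_depth <= 0 or response_time_ms <= 0:
        raise ValueError("queue depth and response time must be positive")
    # Convert milliseconds to seconds by scaling the numerator by 1000.
    return queue_depth * 1000.0 / response_time_ms


if __name__ == "__main__":
    for depth in (32, 64):
        for latency in (0.5, 5.0):
            print(f"depth {depth:>3}, {latency:>4} ms -> {max_iops(depth, latency):>9,.0f} IOPS")
```

Halving latency or doubling the number of IOs kept in flight both double the ceiling, which is why a host-side bottleneck caps throughput regardless of what the array can deliver. A deeper queue only helps if the fabric and array can actually absorb the extra outstanding IOs.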

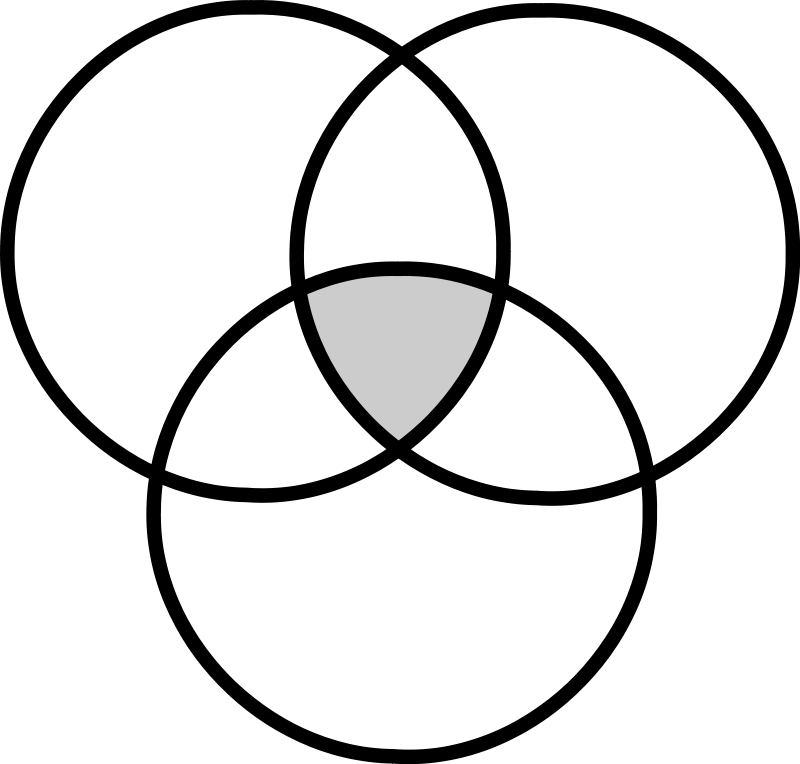

The Grey Area

As the heading suggests, the functional split between systems and storage administrators is often made at the HBA/switch boundary. Because the HBA sits in the server, storage admins often see it as the server administrators' responsibility. Server administrators, in turn, very often neglect this part of the puzzle, mainly for two reasons: they either don't know how to upgrade the firmware and drivers, and/or they lack knowledge of the plethora of settings that may, or may not, be applicable. Combined with the maze of acronyms and the complexity of tuning, it is not really surprising that this gets overlooked or neglected.

The truth is that the OS parts of the IO stack should be a combined effort between OS and storage admins. Everything from the file system downwards is a mutual responsibility between these two people/departments, and any actions, configurations, updates or changes in general should be coordinated. Settings related to file-system layout, volume manager configuration and SCSI, as well as MPIO and the HBA, all have a severe impact on functionality, performance, manageability and reliability.
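If it helps to make the shared ownership explicit, change-review tooling could encode it. This hypothetical Python sketch maps each layer to the teams that must sign off on a change; the layer names follow the text, while the team names, the `application` layer and the rule that switch and array changes belong to storage alone are assumptions for illustration.

```python
"""Sketch: who has to sign off on a change, given the layers it touches.

Layer names follow the text. The team names, the "application" layer and
the rule that switch/array changes belong to storage alone are assumptions.
"""

OS_TEAM = "OS admins"
STORAGE_TEAM = "storage admins"

# Everything from the file system down to the HBA is shared ground.
SHARED_LAYERS = {
    "file system", "volume manager", "crypto layer", "MPIO",
    "SCSI/NVMe", "FCP/FC-NVMe", "HBA driver", "HBA firmware",
}
OS_ONLY_LAYERS = {"application"}        # assumption for illustration
STORAGE_ONLY_LAYERS = {"switch", "array"}  # assumption for illustration


def required_approvers(layers: set[str]) -> set[str]:
    """Return the teams that must agree before the change goes ahead."""
    unknown = layers - SHARED_LAYERS - OS_ONLY_LAYERS - STORAGE_ONLY_LAYERS
    if unknown:
        raise ValueError(f"unmapped layers: {sorted(unknown)}")
    approvers: set[str] = set()
    if layers & (SHARED_LAYERS | OS_ONLY_LAYERS):
        approvers.add(OS_TEAM)
    if layers & (SHARED_LAYERS | STORAGE_ONLY_LAYERS):
        approvers.add(STORAGE_TEAM)
    return approvers


if __name__ == "__main__":
    # An HBA firmware update touches shared ground, so both teams sign off.
    print(sorted(required_approvers({"HBA firmware"})))
    # A switch change stays on the storage side under this sketch's rules.
    print(sorted(required_approvers({"switch"})))
```

Raising an error for unmapped layers is a deliberate choice: a change that nobody has classified is exactly the kind that falls into the grey area.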

So a "grey area" should actually be a coordinated area. This way, combined efforts and knowledge will improve your overall service to the business.


Cheers

Erwin